# Weixin Mini Program Quality testing

Weixin Mini Program quality testing combines startup performance testing with the automated testing capabilities of the cloud testing service to run a comprehensive quality check on connected Mini Programs.

As the sample quality inspection report shows, quality inspection checks a Weixin Mini Program in four areas:

- Startup performance

- Operational performance

- Compatibility

- Network performance

# Getting started with quality inspection tasks

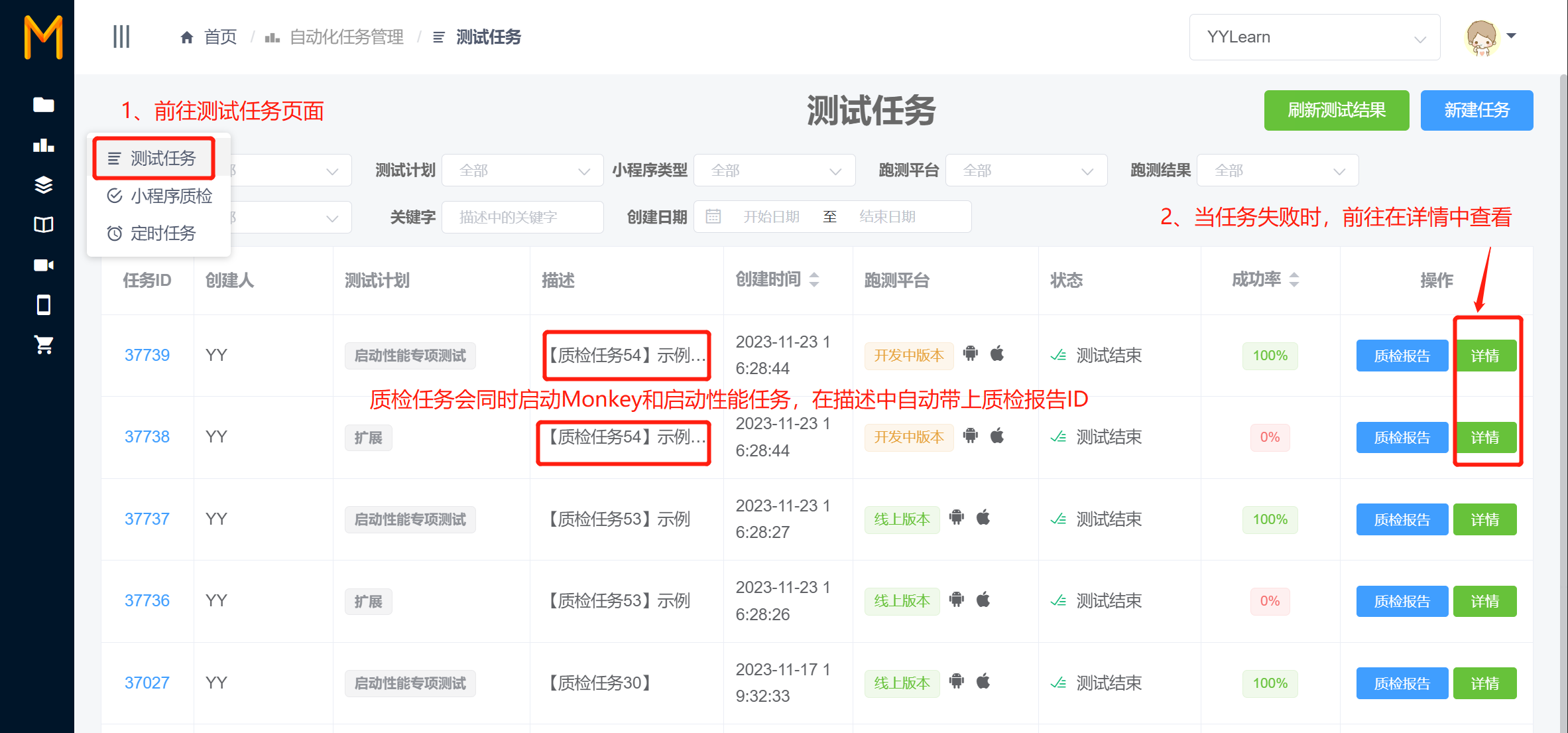

To open the quality inspection task page, go to "Automated Task Management" > "Weixin Mini Program Quality Inspection" in the left navigation bar. Then click the "New Quality Inspection Task" button in the upper-right corner of the page to create a quality inspection task.

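Special note:

For each platform or device model selected in a quality inspection task, two devices of that same model are used: one runs the automated test task and the other runs the startup performance task.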

So each platform or model runs an automated test task (by default, a 20-minute intelligent Monkey task) and a 20-minute startup performance test, for a total of about 40 minutes of test time.
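The arithmetic above can be written as a small helper. This is a sketch under the stated defaults only: `estimateInspection` and its parameters are hypothetical names, not part of the cloud testing service, and the result is test time summed across both tasks, not wall-clock time.

```typescript
// Hypothetical helper (not part of the cloud testing service): estimates the
// devices and total test time one quality inspection task uses, assuming the
// default durations described above.
interface InspectionEstimate {
  devices: number;     // two devices of the same model per selected platform/model
  testMinutes: number; // automated test time + startup performance time, summed
}

function estimateInspection(
  selections: number,         // number of platforms or models selected
  monkeyMinutes: number = 20, // default intelligent Monkey duration
  startupMinutes: number = 20 // startup performance test duration
): InspectionEstimate {
  if (selections < 0 || Math.floor(selections) !== selections) {
    throw new Error("selections must be a non-negative integer");
  }
  return {
    devices: selections * 2,
    testMinutes: selections * (monkeyMinutes + startupMinutes),
  };
}

// Three models selected: 6 devices and 120 minutes of test time in total.
const estimate = estimateInspection(3);
console.log(estimate.devices, estimate.testMinutes);
```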

# Troubleshooting failed tasks

As mentioned above, a quality inspection task runs the automated test task and the startup performance task on two devices of the same model, and the quality inspection report relies on the results of both tasks.

If a task fails, the cause is usually related to the real device, and the test report page provides more detailed hints.

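Special note:

Startup performance is evaluated against the We Analytics cold-start results for the same Mini Program on the same device model.

If the Mini Program has few online visitors and no comparison value exists for the same device model, no evaluation result can be obtained (the report shows N/A).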
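A minimal sketch of the N/A rule, assuming the reference data can be modeled as a map from device model to a We Analytics cold-start time in milliseconds. `evaluateStartup`, `ColdStartBaselines`, and the model names are hypothetical; how the service turns the comparison into a score is not described here, so the sketch only computes the difference.

```typescript
// Hypothetical sketch: look up the We Analytics cold-start reference for the same
// Mini Program on the same device model, and report "N/A" when none exists.
// The data shape and names are illustrative assumptions.
type ColdStartBaselines = { [deviceModel: string]: number }; // reference cold start, ms

type StartupEvaluation =
  | { status: "ok"; measuredMs: number; baselineMs: number; deltaMs: number }
  | { status: "N/A" };

function evaluateStartup(
  measuredMs: number,
  deviceModel: string,
  baselines: ColdStartBaselines
): StartupEvaluation {
  // Too few online visitors on this model means there is no comparison value.
  if (!Object.prototype.hasOwnProperty.call(baselines, deviceModel)) {
    return { status: "N/A" };
  }
  const baselineMs = baselines[deviceModel];
  // Positive delta: the tested run was slower than the online reference.
  return { status: "ok", measuredMs, baselineMs, deltaMs: measuredMs - baselineMs };
}

const baselines: ColdStartBaselines = { "Model A": 1800 }; // made-up model and value
console.log(evaluateStartup(2100, "Model A", baselines)); // compared with the reference
console.log(evaluateStartup(2100, "Model B", baselines)); // no reference for this model
```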

# Rules for scoring quality inspection reports

# Overview

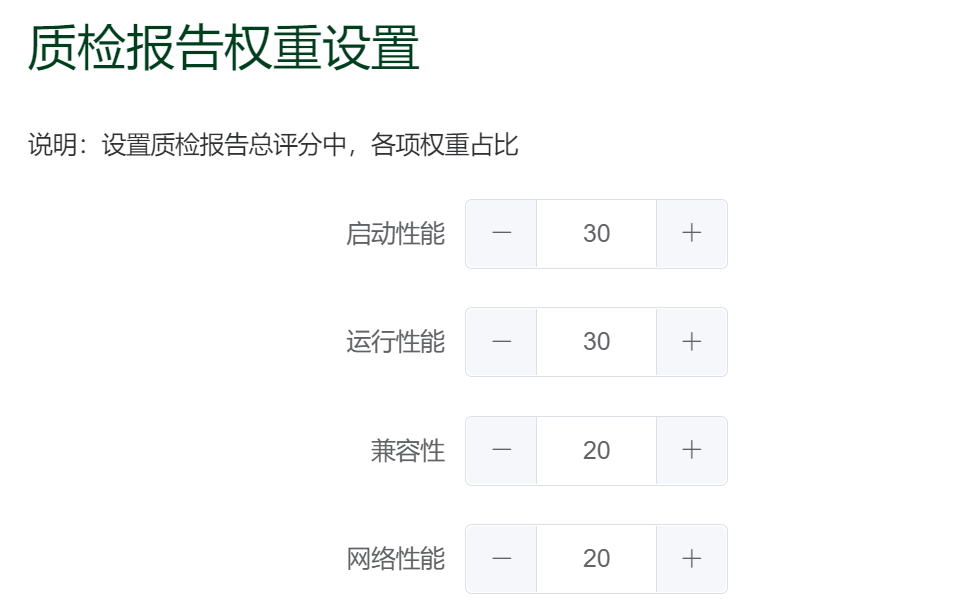

The cloud testing service combines the evaluation results of all four areas into the overall Weixin Mini Program quality inspection result, using the following weights:

- Startup performance: 30%

- Operational performance: 30%

- Compatibility: 20%

- Network performance: 20%

Users can adjust these weights to suit their project in Project Management > Run Performance Settings.
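The scoring rule can be sketched as a weighted sum. This assumes, without confirmation from the report, that each area is scored on a 0 to 100 scale and that the overall result is a plain weighted sum; `overallScore` and `AreaScores` are illustrative names. Passing a custom `weights` object models the per-project adjustment.

```typescript
// Per-area scores, assumed (not confirmed) to be on a 0-100 scale.
interface AreaScores {
  startup: number;       // startup performance
  runtime: number;       // operational performance
  compatibility: number;
  network: number;       // network performance
}

// Default weights from the scoring rules; projects can adjust them.
const DEFAULT_WEIGHTS: AreaScores = {
  startup: 0.3,
  runtime: 0.3,
  compatibility: 0.2,
  network: 0.2,
};

// Weighted sum of the four area scores.
function overallScore(scores: AreaScores, weights: AreaScores = DEFAULT_WEIGHTS): number {
  const areas: (keyof AreaScores)[] = ["startup", "runtime", "compatibility", "network"];
  let weightSum = 0;
  let total = 0;
  for (const area of areas) {
    weightSum += weights[area];
    total += scores[area] * weights[area];
  }
  // Adjusted weights must still add up to 100% (small tolerance for floating point).
  if (Math.abs(weightSum - 1) > 1e-9) {
    throw new Error("weights must sum to 1");
  }
  return total;
}

const sample: AreaScores = { startup: 80, runtime: 90, compatibility: 100, network: 70 };
console.log(overallScore(sample));
// Hypothetical project that cares more about startup performance.
console.log(overallScore(sample, { startup: 0.4, runtime: 0.2, compatibility: 0.2, network: 0.2 }));
```

With the default weights, the sample scores give 80 × 0.3 + 90 × 0.3 + 100 × 0.2 + 70 × 0.2 = 24 + 27 + 20 + 14 = 85; the adjusted weights give 32 + 18 + 20 + 14 = 84. Rejecting weights that do not sum to 1 keeps adjusted scores on the same scale as the defaults.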

# Startup performance

Startup performance testing covers the two most common ways a Weixin Mini Program is launched from a cold start: with a code package download, and with the code package already downloaded. For each, it analyzes the time spent in the three startup phases (code package download, code injection, and first render) as well as the overall startup time.

The cloud testing service also compares the timings of these three phases and the overall startup time with We Analytics aggregate data for the online version of the Mini Program, so developers can see how the test results compare with real-world usage.

Special note: the final overall result is evaluated using the cold start that downloads the code package.
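To see which startup phase to optimize, the per-phase comparison with We Analytics can be sketched as follows. The phase names mirror the three phases above; `comparePhases`, the millisecond fields, and the sample numbers are hypothetical, and the reference values would be the We Analytics data for the cold start that downloads the code package.

```typescript
// Hypothetical timings for one cold start, in milliseconds.
interface StartupPhases {
  download: number;    // code package download
  injection: number;   // code injection
  firstRender: number; // first render
}

type Phase = keyof StartupPhases;
const PHASES: Phase[] = ["download", "injection", "firstRender"];

// Per-phase difference (measured minus reference; positive means slower)
// and the phase that lags the reference the most.
function comparePhases(measured: StartupPhases, reference: StartupPhases) {
  const delta: StartupPhases = { download: 0, injection: 0, firstRender: 0 };
  let worst: Phase = PHASES[0];
  for (const phase of PHASES) {
    delta[phase] = measured[phase] - reference[phase];
    if (delta[phase] > delta[worst]) {
      worst = phase;
    }
  }
  return { delta, worst };
}

// Made-up numbers for illustration.
const result = comparePhases(
  { download: 900, injection: 600, firstRender: 500 },
  { download: 700, injection: 650, firstRender: 250 }
);
console.log(result.delta, result.worst);
```

Here code injection is faster than the reference, while first render lags it the most (by 250 ms), so first render would be the first phase to investigate.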

# Operational performance

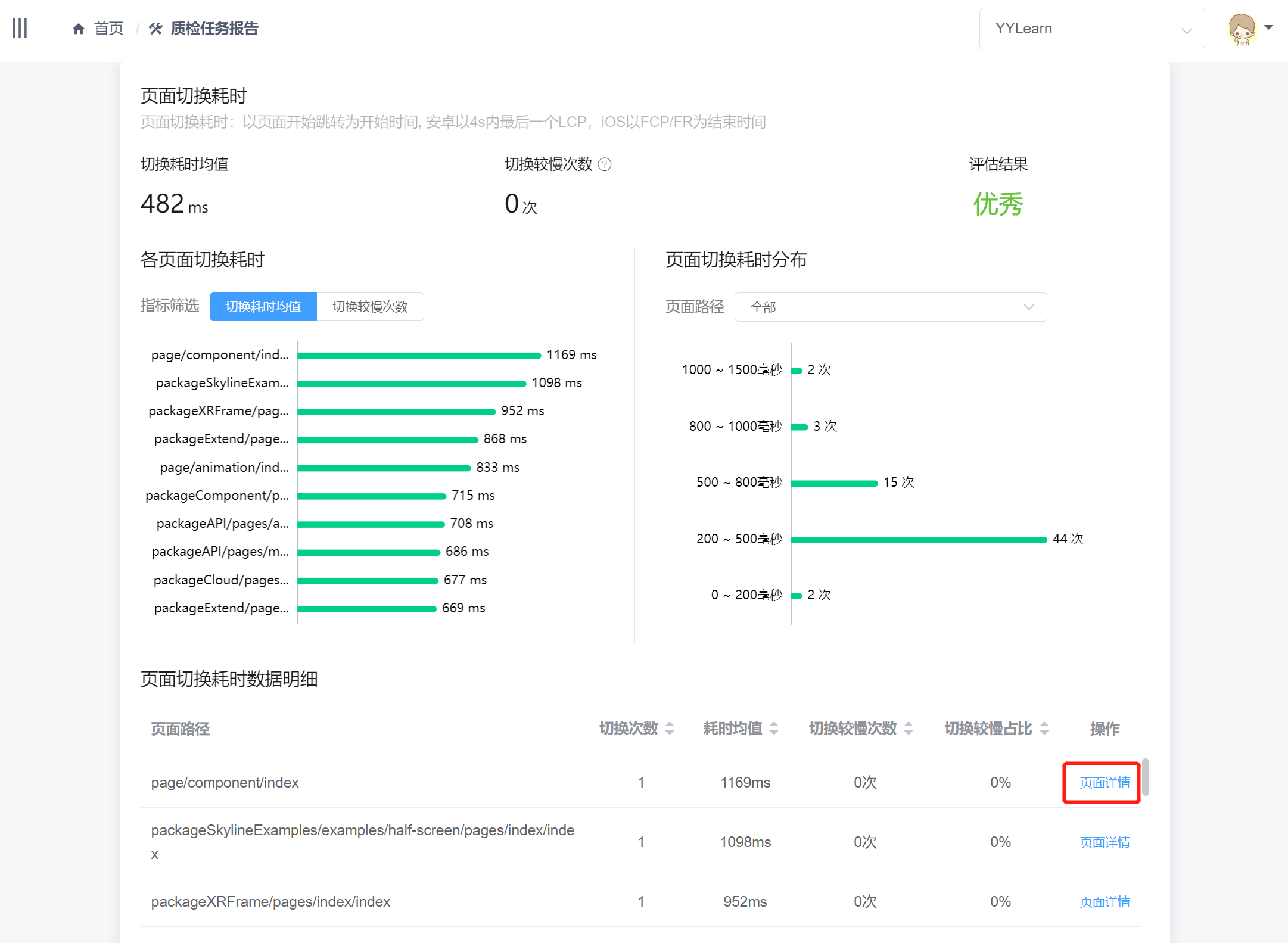

Runtime performance analysis consists of two parts: page switching time and the runtime experience rating.

# 1. Page switching time

A page switch starts when the page jump begins. It ends at the last LCP within 4 s on Android, and at FCP/FR on iOS. Note that this definition differs from the one We Analytics uses for page switching time, so the value measured here is theoretically larger than the We Analytics value.
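The end-point rules can be sketched as follows. This is an interpretation, not the service's code: `PaintEvent` and `pageSwitchTime` are hypothetical, timestamps are assumed to share one clock, and on iOS the sketch assumes the earliest FCP or FR after the jump ends the switch.

```typescript
// Hypothetical paint event; field names are illustrative.
interface PaintEvent {
  type: "LCP" | "FCP" | "FR";
  timeMs: number; // timestamp on the same clock as the jump start
}

const ANDROID_LCP_WINDOW_MS = 4000; // only LCPs within 4 s of the jump count

// Page switch time under the rules above, or null if no qualifying end event.
function pageSwitchTime(
  platform: "android" | "ios",
  jumpStartMs: number,
  events: PaintEvent[]
): number | null {
  let endMs: number | null = null;
  for (const e of events) {
    const elapsed = e.timeMs - jumpStartMs;
    if (elapsed < 0) continue; // ignore events before the jump
    if (platform === "android") {
      // Android: the last LCP within the 4 s window.
      if (e.type === "LCP" && elapsed <= ANDROID_LCP_WINDOW_MS && (endMs === null || e.timeMs > endMs)) {
        endMs = e.timeMs;
      }
    } else if ((e.type === "FCP" || e.type === "FR") && (endMs === null || e.timeMs < endMs)) {
      // iOS (assumption): the earliest FCP or FR after the jump.
      endMs = e.timeMs;
    }
  }
  return endMs === null ? null : endMs - jumpStartMs;
}

const androidEvents: PaintEvent[] = [
  { type: "LCP", timeMs: 1800 },
  { type: "LCP", timeMs: 3200 },
  { type: "LCP", timeMs: 5500 }, // more than 4 s after the jump, ignored
];
console.log(pageSwitchTime("android", 1000, androidEvents));
```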

Page switching time directly reflects how much a Weixin Mini Program lags at runtime. We recommend keeping switching time within 2500 ms.

The report lists the average page switching time, the pages that switch slowly along with their switching times, and a breakdown of where the switching time is spent.
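A sketch of that summary, assuming each page switch is available as a record of target page and duration (the `SwitchRecord` shape, `summarizeSwitches`, and the page paths are hypothetical). Switches above the recommended 2500 ms are listed slowest first.

```typescript
interface SwitchRecord {
  page: string;       // target page path (hypothetical)
  durationMs: number; // measured page switching time
}

// Recommended upper bound for page switching time.
const RECOMMENDED_SWITCH_MS = 2500;

// Average switching time plus the switches that exceed the recommendation.
function summarizeSwitches(records: SwitchRecord[]) {
  let total = 0;
  const slow: SwitchRecord[] = [];
  for (const r of records) {
    total += r.durationMs;
    if (r.durationMs > RECOMMENDED_SWITCH_MS) {
      slow.push(r);
    }
  }
  slow.sort((a, b) => b.durationMs - a.durationMs); // slowest first
  return {
    averageMs: records.length === 0 ? 0 : total / records.length,
    slow,
  };
}

const summary = summarizeSwitches([
  { page: "pages/index", durationMs: 1200 },
  { page: "pages/detail", durationMs: 3100 },
  { page: "pages/cart", durationMs: 2600 },
]);
console.log(summary.averageMs, summary.slow.map((r) => r.page));
```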

To make it easier for developers to find out why a page switches slowly, the page details bring together all information about the page, including its timing, exceptions (such as JSErrors and black or white screens), network requests, and experience ratings.

# 2. Runtime experience rating

The runtime experience rating relies on the experience scoring capability of runtime performance analysis, which checks the Weixin Mini Program in real time while it runs, analyzes what may be degrading the experience, locates the problems, and makes optimization recommendations.

The runtime experience rating evaluates a Weixin Mini Program in three categories: performance checks, best practices, and suggestions.

- [Important] Performance checks: check the number of WXML nodes, setData usage, script execution time, and similar metrics. Performance checks directly affect the runtime experience rating.

- [Minor] Best practices: check HTTPS requests, CSS usage, deprecated APIs, and similar items. Best practices have a slight impact on the runtime experience rating.

- [For reference] Suggestions: check image display, element tap areas, and similar items. Suggestions are for reference only and do not count toward the runtime experience rating.
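The three categories differ in how much they count toward the rating. The sketch below encodes that ordering with made-up relative weights (`IMPACT`); the service's actual weights are not documented here, and only the ordering (performance checks above best practices, suggestions not counted) reflects the text. `Finding` and `experienceImpact` are hypothetical names.

```typescript
// Hypothetical model of the three runtime experience categories.
type Category = "performance" | "bestPractice" | "suggestion";

interface Finding {
  category: Category;
  rule: string; // illustrative description of the failed check
}

// Made-up relative weights: only the ordering follows the text.
// Suggestions are for reference only, so they contribute nothing.
const IMPACT: { [c in Category]: number } = {
  performance: 1,
  bestPractice: 0.2,
  suggestion: 0,
};

// Total weighted impact of the findings on the runtime experience rating.
function experienceImpact(findings: Finding[]): number {
  let sum = 0;
  for (const finding of findings) {
    sum += IMPACT[finding.category];
  }
  return sum;
}

const findings: Finding[] = [
  { category: "performance", rule: "too many WXML nodes" },
  { category: "bestPractice", rule: "request not using HTTPS" },
  { category: "suggestion", rule: "tap area too small" },
];
console.log(experienceImpact(findings));
```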

# Compatibility

A Weixin Mini Program should run on any device without JSErrors or UI anomalies such as black or white screens and overlapping text.

The compatibility check helps developers find JSErrors and UI anomalies that occur during the Monkey run, along two dimensions:

- JSError: detects whether a page throws a JSError at runtime.

- UI anomalies: checks screenshots captured at runtime for UI anomalies such as black or white screens and overlapping text.
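When triaging compatibility results, it can help to see which device models hit problems on each page. A minimal sketch, assuming detected issues are available as records of page, issue kind, and device model; `Issue`, `devicesWithIssues`, the page paths, and the model names are hypothetical.

```typescript
// Hypothetical record of one issue found during the Monkey run.
type IssueKind = "JSError" | "blankScreen" | "textOverlap";

interface Issue {
  page: string;   // page path where the issue occurred
  kind: IssueKind;
  device: string; // device model
}

// For each page, the distinct device models on which any issue was detected.
function devicesWithIssues(issues: Issue[]): { [page: string]: string[] } {
  const result: { [page: string]: string[] } = {};
  for (const issue of issues) {
    if (!Object.prototype.hasOwnProperty.call(result, issue.page)) {
      result[issue.page] = [];
    }
    const devices = result[issue.page];
    if (devices.indexOf(issue.device) === -1) {
      devices.push(issue.device);
    }
  }
  return result;
}

const issues: Issue[] = [
  { page: "pages/index", kind: "JSError", device: "Model A" },
  { page: "pages/index", kind: "blankScreen", device: "Model B" },
  { page: "pages/index", kind: "JSError", device: "Model A" },
  { page: "pages/detail", kind: "textOverlap", device: "Model A" },
];
console.log(devicesWithIssues(issues));
```

A page that fails on only some models points to a device-specific compatibility problem rather than a general bug.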

# Network performance

Network performance has a large impact on the user experience of a Weixin Mini Program. For example, a network request that returns an error may cause a page to display incorrectly.

Congested network requests can also make pages lag, resulting in a poor user experience.

The quality inspection report evaluates network performance along two dimensions: request results and request time.

# Analysis of request results

Request result analysis examines request failures and the number of requests:

- Request failures: checks whether network requests fail. Developers should pay close attention to failed requests, because they are likely to break Mini Program features.

- Number of requests: analyzes how many requests each page sends. For pages that send many requests, developers can consider merging some of the requests or caching responses to improve page performance.
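The two checks can be sketched over a list of request records. Assumptions: a request counts as failed when it gets no response (`statusCode` is `null`) or a status of 400 or higher, and `manyRequests` is a hypothetical threshold, since the report does not state one. `RequestRecord`, `analyzeRequestResults`, and the example URLs are illustrative.

```typescript
// Hypothetical request record; statusCode is null when no response arrived.
interface RequestRecord {
  page: string;
  url: string;
  statusCode: number | null;
}

function analyzeRequestResults(records: RequestRecord[], manyRequests: number = 10) {
  // Assumption: no response, or an HTTP status of 400 or above, counts as a failure.
  const failed = records.filter(
    (r) => r.statusCode === null || r.statusCode >= 400
  );
  // Number of requests sent by each page.
  const perPage: { [page: string]: number } = {};
  for (const r of records) {
    perPage[r.page] = (perPage[r.page] || 0) + 1;
  }
  // Pages that may benefit from merging requests or caching responses.
  const busyPages = Object.keys(perPage).filter((p) => perPage[p] > manyRequests);
  return { failed, perPage, busyPages };
}

const requests: RequestRecord[] = [
  { page: "pages/index", url: "https://example.com/api/a", statusCode: 200 },
  { page: "pages/index", url: "https://example.com/api/b", statusCode: 500 },
  { page: "pages/detail", url: "https://example.com/api/c", statusCode: null },
];
const analysis = analyzeRequestResults(requests);
console.log(analysis.failed.length, analysis.perPage, analysis.busyPages);
```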

# Request time analysis

Request time analysis checks whether requests take too long. We recommend that every network request return its result within 1 second.

The report also provides details of every request record.
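Flagging requests that miss the 1-second recommendation can be sketched as a filter over the request records. `TimedRequest`, `slowRequests`, and the example URLs are hypothetical, and the limit is a parameter so a stricter target can be used.

```typescript
interface TimedRequest {
  url: string;
  durationMs: number;
}

// The report recommends that every request return within 1 second.
const RECOMMENDED_LIMIT_MS = 1000;

// Requests slower than the limit, slowest first; the input array is not modified
// because filter() returns a new array before sort() runs.
function slowRequests(
  records: TimedRequest[],
  limitMs: number = RECOMMENDED_LIMIT_MS
): TimedRequest[] {
  return records
    .filter((r) => r.durationMs > limitMs)
    .sort((a, b) => b.durationMs - a.durationMs);
}

const timed: TimedRequest[] = [
  { url: "https://example.com/api/a", durationMs: 300 },
  { url: "https://example.com/api/b", durationMs: 1500 },
  { url: "https://example.com/api/c", durationMs: 1200 },
];
console.log(slowRequests(timed).map((r) => r.url));
```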

# Getting help

If you have suggestions or requests, go to the Help page and scan the QR code to join the official cloud testing WeCom group, then send your feedback to the group owner.