# Post-processing node

Post-processing nodes are a special kind of render node. They provide a set of conventions and lifecycle hooks that help developers quickly build custom post-processing effects.

# Base class

All post-processing nodes must derive from the base class `RGPostProcessNode`:

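```ts
// Data for each post-processing pass
export interface IPostProcessPassData {
  // Effect used to render this pass
  effect: Effect;
  // Where this pass writes its output
  renderTarget: RenderTexture | Screen;
  // lightMode used when rendering this pass
  lightMode: string;
}

// Options common to all post-processing nodes
export interface IRGPostProcessNodeOptions {
  // Render the result directly to the screen
  renderToScreen?: boolean;
  // Use a floating-point (HDR) render target
  hdr?: boolean;
}

// Base class of all post-processing nodes
export abstract class RGPostProcessNode<
  TInput extends {[key: string]: keyof IRGData} = {},
  TOptions extends IRGPostProcessNodeOptions = IRGPostProcessNodeOptions
> extends RGNode<TInput, 'RenderTarget', TOptions> {
  // Returns one entry per pass; the last pass's render target is the node's output
  public abstract onGetPasses(context: RenderSystem, options: TOptions): IPostProcessPassData[];

  // Called once the pass data has been assembled (e.g. to set material macros)
  public onCreatedData(context: RenderSystem, materials: Material[], passes: IPostProcessPassData[], options: TOptions): void {}

  // Called before rendering on every execution to update per-frame data
  public onFillData(context: RenderSystem, materials: Material[], passes: IPostProcessPassData[], options: TOptions): void {}
}
```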

As the introduction to render nodes in the previous chapter shows, a post-processing node is essentially a node whose inputs can be customized and whose output is a `RenderTarget`. In practice, most post-processing nodes take one or more `RenderTexture`s as input and write their result to a `RenderTexture` or to the `Screen`, which means post-processing nodes can naturally be chained. Seen this way, a simple post-processing flow can even serve as a complete pipeline on its own.
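To make the chaining idea concrete, here is a small engine-independent sketch. It is not the framework's API: `Texture`, `PostProcessStep`, `runChain`, `scale` and `clamp01` are hypothetical names, and a "texture" is just an array of numbers. Each step reads the previous step's output, in the same way each post-processing node reads its `sourceTex` from the node before it.

```typescript
// Hypothetical stand-ins; a "texture" here is just a named array of pixel values.
type Texture = { name: string; pixels: number[] };

interface PostProcessStep {
  // Reads one input texture and returns the texture it rendered into.
  run(input: Texture): Texture;
}

// Feeds each step's output into the next step's input, the way each
// post-processing node takes the previous node's output as `sourceTex`.
function runChain(source: Texture, steps: PostProcessStep[]): Texture {
  let current = source;
  for (const step of steps) {
    current = step.run(current);
  }
  return current;
}

// Two toy steps: scale brightness, then clamp to [0, 1].
function scale(k: number): PostProcessStep {
  return {
    run: (input) => ({ name: `scale-${k}`, pixels: input.pixels.map((p) => p * k) }),
  };
}

const clamp01: PostProcessStep = {
  run: (input) => ({ name: "clamp", pixels: input.pixels.map((p) => Math.min(1, Math.max(0, p))) }),
};

const scene: Texture = { name: "scene", pixels: [0.2, 0.6, 1.5] };
const out = runChain(scene, [scale(2), clamp01]);
console.log(out.name, out.pixels); // out.pixels is [0.4, 1, 1]
```

Reordering or replacing entries in the step array changes the effect chain without touching the steps themselves, which is the property that lets post-processing nodes be cascaded.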

# Custom node

The key to writing a custom post-processing node is understanding its three lifecycle hooks. The following uses the simplest node, Blit, as an example:

```ts
export class RGBlitPostProcessNode extends RGPostProcessNode<{sourceTex: 'RenderTarget'}> {
  // Declares a single render-target input named `sourceTex`
  public inputTypes = {sourceTex: 'RenderTarget' as 'RenderTarget'};

  // One pass: copy `sourceTex` using the built-in Blit effect
  public onGetPasses(context: RenderSystem, options: IRGPostProcessNodeOptions): IPostProcessPassData[] {
    return [{
      effect: this.getEffectByName('System::Effect::Blit'),
      // Write to the screen, or to a screen-sized texture (RGBA16F when HDR is on)
      renderTarget: options.renderToScreen ? context.screen : new RenderTexture({
        width: context.screen.width,
        height: context.screen.height,
        colors: [{
          pixelFormat: options.hdr ? Kanata.ETextureFormat.RGBA16F : Kanata.ETextureFormat.RGBA8
        }]
      }),
      lightMode: 'Default'
    }];
  }

  public onCreatedData(context: RenderSystem, materials: Material[], passes: IPostProcessPassData[], options: IRGPostProcessNodeOptions) {
    // Nothing to prepare for a plain copy
  }

  // Every execution: bind the current input texture to the single pass's material
  public onFillData(context: RenderSystem, materials: Material[]): void {
    const srcTex = this.getInput('sourceTex') as RenderTexture;
    if (srcTex) {
      materials[0].setTexture('sourceTex', srcTex);
    }
  }
}
```

This node first declares its input types, `{sourceTex: 'RenderTarget'}`, stating that it requires one render target as input.
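The `as 'RenderTarget'` assertion in `inputTypes` matters because TypeScript widens a string literal inside a mutable object to `string`, which would not satisfy a `keyof IRGData` constraint. The sketch below reproduces this with a hypothetical `IRGDataModel` interface standing in for the engine's `IRGData` (assumed to have a `RenderTarget` key, as the constraint implies); `declareInputs` is also a hypothetical helper.

```typescript
// Hypothetical stand-in for the engine's IRGData; only the key names matter here.
interface IRGDataModel {
  RenderTarget: unknown;
  Camera: unknown;
  MeshList: unknown;
}

// Same shape as the TInput constraint on RGPostProcessNode.
type InputTypes = { [key: string]: keyof IRGDataModel };

function declareInputs(spec: InputTypes): string[] {
  return Object.keys(spec);
}

// Without the assertion, `sourceTex` is inferred as plain `string`.
const widened = { sourceTex: "RenderTarget" };
// With it, the property keeps the literal type "RenderTarget".
const narrowed = { sourceTex: "RenderTarget" as "RenderTarget" };

const keys = declareInputs(narrowed);
// declareInputs(widened); // compile error: 'string' is not assignable to 'keyof IRGDataModel'
console.log(keys, typeof widened.sourceTex);
```

Writing `as const` on the whole object literal would be an equivalent way to keep the literal type.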

Next comes the `onGetPasses` lifecycle hook, which returns an array; its length determines how many passes the node runs. Each element specifies the `Effect` used for rendering, the `RenderTarget` to output to, and the `lightMode` used for rendering. The node uses the `RenderTarget` of the last pass as its overall output.

The `onCreatedData` hook runs after the pass data has been assembled. Use it for one-time preparation, such as setting macros on the materials.

Finally, `onFillData` is called before rendering on every execution to update the data needed for each frame. In this example it fetches the current input texture and binds it to the material.

Note that the length of `materials` equals the number of passes: each pass corresponds to one `Material`.
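The call order described above can be modelled without the engine. In this sketch `PostProcessNodeModel` mirrors the three hooks of `RGPostProcessNode`, while `NodeDriver` is a hypothetical scheduler, not the engine's. It calls `onGetPasses` and `onCreatedData` once, creates one material per pass, calls `onFillData` on every execution, and reports the last pass's render target as the node's output. Strings stand in for effects and render targets, and the pass names are made up.

```typescript
// Strings stand in for engine objects in this hypothetical model.
interface PassData {
  effect: string;
  renderTarget: string;
  lightMode: string;
}

interface MaterialModel {
  effect: string;
  textures: Map<string, string>;
}

// Mirrors the three lifecycle hooks of RGPostProcessNode.
abstract class PostProcessNodeModel {
  abstract onGetPasses(): PassData[];
  onCreatedData(_materials: MaterialModel[], _passes: PassData[]): void {}
  onFillData(_materials: MaterialModel[], _passes: PassData[]): void {}
}

// Assumed scheduling: set up once, then fill data before every execution.
class NodeDriver {
  private node: PostProcessNodeModel;
  private passes: PassData[] = [];
  materials: MaterialModel[] = [];

  constructor(node: PostProcessNodeModel) {
    this.node = node;
  }

  setup(): void {
    this.passes = this.node.onGetPasses();
    // One material per pass, created from that pass's effect.
    this.materials = this.passes.map((p) => ({ effect: p.effect, textures: new Map<string, string>() }));
    this.node.onCreatedData(this.materials, this.passes);
  }

  // Returns the node's output: the render target of the last pass.
  execute(): string {
    this.node.onFillData(this.materials, this.passes);
    // (Rendering each pass with materials[i] into passes[i].renderTarget is omitted.)
    return this.passes[this.passes.length - 1].renderTarget;
  }
}

// Example: a two-pass node that records the order of hook calls.
class TwoPassNode extends PostProcessNodeModel {
  calls: string[] = [];

  onGetPasses(): PassData[] {
    this.calls.push("onGetPasses");
    return [
      { effect: "Downsample", renderTarget: "half-res-texture", lightMode: "Default" },
      { effect: "Blur", renderTarget: "screen", lightMode: "Default" },
    ];
  }

  onCreatedData(): void {
    this.calls.push("onCreatedData");
  }

  onFillData(materials: MaterialModel[]): void {
    this.calls.push("onFillData");
    materials[0].textures.set("sourceTex", "scene-color");
  }
}

const node = new TwoPassNode();
const driver = new NodeDriver(node);
driver.setup();
driver.execute();
const output = driver.execute();
console.log(node.calls, output);
```

Running two executions shows the split: setup hooks fire once, while `onFillData` fires every time.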

# Built-in node

The mini-game framework currently provides the following built-in post-processing nodes:

# RGBlitPostProcessNode

Copy node: copies the input texture `sourceTex` to the output.

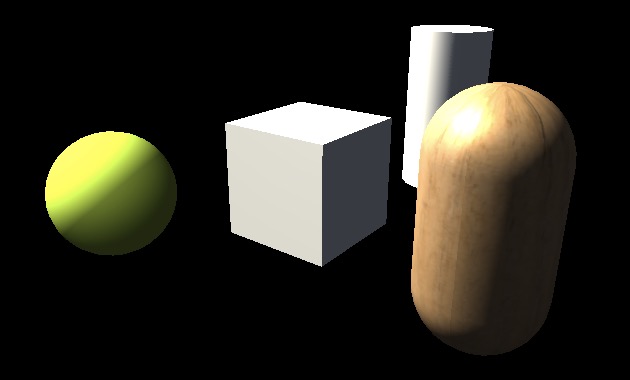

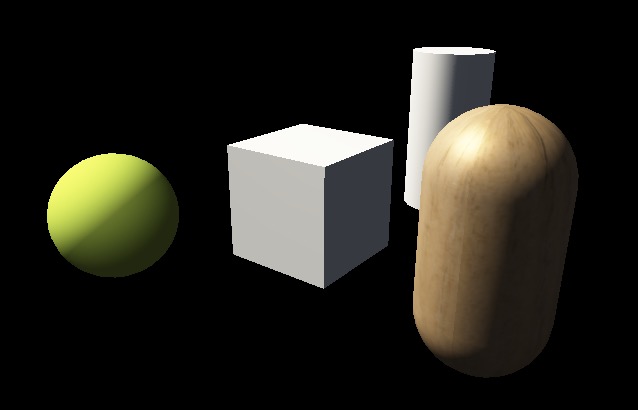

# RGHDRPostProcessNode

Tone-mapping node: applies tone mapping to the input texture `sourceTex` and outputs the result.

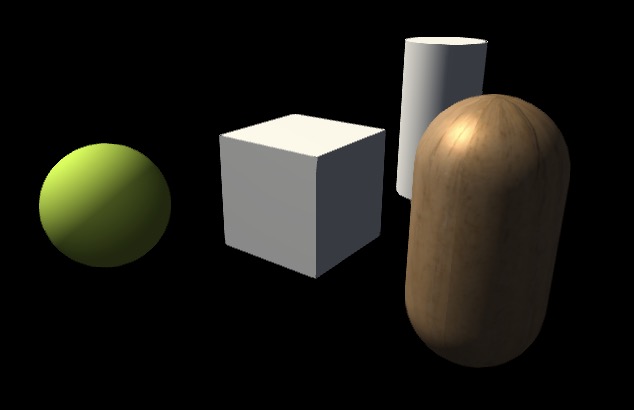

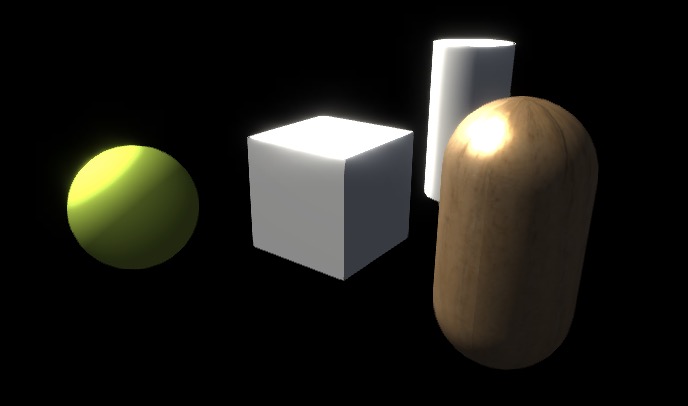

With the scene light intensity set to 2.0:

Before enabling | Enabled (exposure = 1.0) | Enabled (exposure = 0.5)

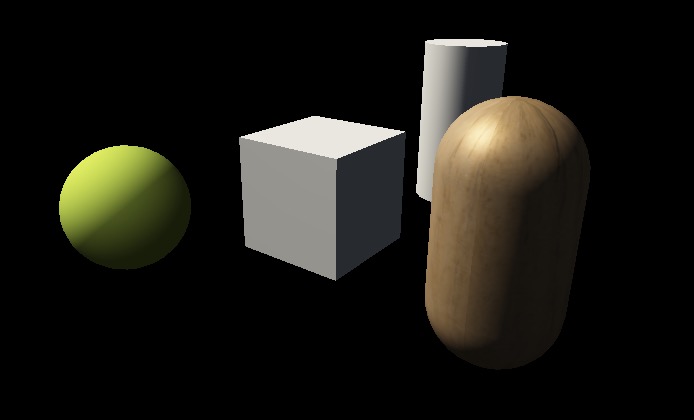
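The document does not state which tone-mapping curve `RGHDRPostProcessNode` uses. Purely to illustrate what an exposure parameter does, the sketch below assumes the classic Reinhard operator applied to exposure-scaled colour, c·e / (1 + c·e). `reinhardToneMap` is a hypothetical helper, not the node's shader.

```typescript
type RGB = [number, number, number];

// Reinhard tone mapping with an exposure multiplier (illustrative assumption).
// Maps any non-negative HDR value into [0, 1).
function reinhardToneMap(hdr: RGB, exposure: number): RGB {
  const [r, g, b] = hdr.map((c) => {
    const exposed = c * exposure;
    return exposed / (1 + exposed);
  });
  return [r, g, b];
}

const bright: RGB = [2, 2, 2]; // e.g. a surface lit at intensity 2.0
console.log(reinhardToneMap(bright, 1.0)); // each channel 2 / 3
console.log(reinhardToneMap(bright, 0.5)); // each channel 0.5
```

Under this model, doubling the light intensity and halving the exposure cancel out: `[2, 2, 2]` at exposure 0.5 maps to the same value as `[1, 1, 1]` at exposure 1.0.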

# RGFXAAPostProcessNode

Fast approximate anti-aliasing (FXAA) node: applies anti-aliasing to the input texture `sourceTex` and outputs the result.

Before enabling | After enabling

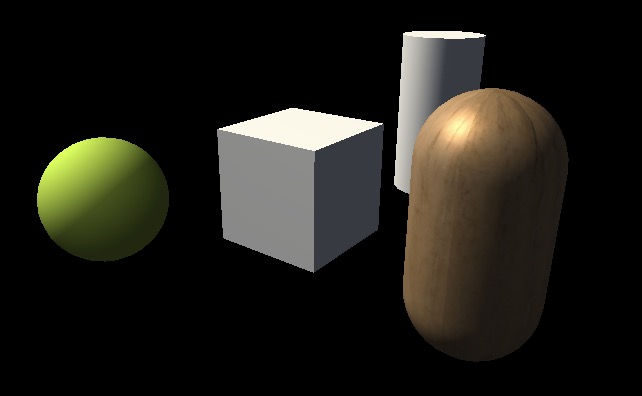
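FXAA works on luminance contrast. Pixels whose neighbourhood contrast is below a threshold are left untouched, which keeps the pass cheap. The sketch below shows only that early-exit test. It uses Rec. 601 luma weights and the default quality thresholds from NVIDIA's FXAA 3.11 (0.166 and 0.0833). This document does not say whether `RGFXAAPostProcessNode` uses the same values, and `needsAntiAliasing` is a hypothetical name.

```typescript
type RGB = [number, number, number];

// Rec. 601 luma weights (assumed; implementations differ, some use green only).
function luma([r, g, b]: RGB): number {
  return 0.299 * r + 0.587 * g + 0.114 * b;
}

// FXAA 3.11 default quality thresholds, assumed here for illustration.
const EDGE_THRESHOLD = 0.166; // needed contrast, as a fraction of the local max luma
const EDGE_THRESHOLD_MIN = 0.0833; // absolute floor that skips very dark areas

// Early-exit test: true when the contrast between the centre pixel and its
// four direct neighbours is high enough for FXAA to smooth the pixel.
function needsAntiAliasing(center: RGB, north: RGB, south: RGB, east: RGB, west: RGB): boolean {
  const lumas = [center, north, south, east, west].map(luma);
  const maxLuma = Math.max(...lumas);
  const minLuma = Math.min(...lumas);
  const range = maxLuma - minLuma;
  return range >= Math.max(EDGE_THRESHOLD_MIN, maxLuma * EDGE_THRESHOLD);
}

const black: RGB = [0, 0, 0];
const white: RGB = [1, 1, 1];
console.log(needsAntiAliasing(black, white, black, black, black)); // true: hard edge
```

Pixels that pass this test then go through FXAA's edge-direction search and blending, which this sketch omits.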

# RGBloomPostProcessNode

Bloom node: applies a bloom effect, controlled by adjustable parameters, to the input texture `sourceTex` and outputs the result.

Before enabling | After enabling
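A typical bloom effect extracts the parts of the image brighter than a threshold, blurs them, and adds the blurred glow back on top of the original. The document does not list the node's parameters, so `threshold` and `intensity` below are hypothetical. The image is reduced to a single row of luminance values, and a 3-tap box blur stands in for the wider blur a real implementation would use.

```typescript
// A single row of luminance values keeps the sketch short.
type Row = number[];

// Keep only the energy above the threshold.
function brightPass(row: Row, threshold: number): Row {
  return row.map((v) => Math.max(0, v - threshold));
}

// 3-tap box blur with clamped edges.
function boxBlur(row: Row): Row {
  return row.map((_, i) => {
    const left = row[Math.max(0, i - 1)];
    const right = row[Math.min(row.length - 1, i + 1)];
    return (left + row[i] + right) / 3;
  });
}

// Bright pass, blur, then add the glow back onto the original.
function bloom(row: Row, threshold: number, intensity: number): Row {
  const glow = boxBlur(brightPass(row, threshold));
  return row.map((v, i) => v + intensity * glow[i]);
}

console.log(bloom([0, 0, 3, 0, 0], 1, 1)); // the highlight spills into its neighbours
```

Values below the threshold contribute no glow, so dim areas pass through unchanged.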

```ts
    uniforms.setUniform("u_lightMap", BuildInTextures.black._getengineTexture()!);
    uniforms.setUniform("u_speCube", settings.mainEnvironmentMap._getengineTexture()!);
    uniforms.setUniform("u_environmentMap", settings.mainPanoramaMap._getengineTexture()!);
    uniforms.setUniform('u_csmFarBounds', [0, 0, 0, 0]);
    uniforms.setUniform('u_SH', settings.shCoefficients);
    this._globalUniforms.uiUniforms?.copy(uniforms);

    const faUniforms = this._globalUniforms.faUniforms!;
    tempArr4[0] = settings.fogMode;
    tempArr4[1] = settings.fogStart;
    tempArr4[2] = settings.fogStart + settings.fogRange;
    tempArr4[3] = settings.fogDensity;
    faUniforms.setUniform("u_fogInfos", tempArr4);
    faUniforms.setUniform("u_fogColor", settings.fogColor._raw);
    tempArr1[0] = this.game.gameTime;
    faUniforms.setUniform("u_gameTime", tempArr1);

    // Register the default shadow Effect
    if (!this._shadowFallbackRegistered) {
      const scEffect = engine.loader.getAsset<Effect>('System::Effect::ShadowCasterFallBack');
      if (scEffect) {
        renderEnv.registerFallbackEffect('ShadowCaster', scEffect);
        this._shadowFallbackRegistered = true;
      }
    }
  }

  // After all cameras have been processed, clear the current game's UICanvas dirty state:
  // the vertex dirty bits, the material dirty bits, and the dirty lists themselves
  public onExecuteDone(context: RenderSystem) {
    const uiCanvas = this.game!.rootUICanvas;
    if (uiCanvas._vertexDirtyList.length > 0) {
      for (let i = 0; i < uiCanvas._vertexDirtyList.length; i++) {
        const dirtyRenderable2d = uiCanvas._vertexDirtyList.data[i];
        if (dirtyRenderable2d._vertexDirtyUpdateFlag) {
          // Clean up dirty vertices
          dirtyRenderable2d._vertexDirtyUpdateFlag = false;
        }
      }
      uiCanvas._vertexDirtyList.reset();
    }
    if (uiCanvas._materialDirtyList.length > 0) {
      for (let i = 0; i < uiCanvas._materialDirtyList.length; i++) {
        const dirtyRenderable2d = uiCanvas._materialDirtyList.data[i];
        if (dirtyRenderable2d._materialDirtyUpdateFlag) {
          // Clean up dirty material
          dirtyRenderable2d._materialDirtyUpdateFlag = false;
        }
      }
      uiCanvas._materialDirtyList.reset();
    }
    if (uiCanvas._designResolutionDirty) {
      uiCanvas._designResolutionDirty = false;
    }
  }

  public onDisable(context: RenderSystem) {
    // Unregister the default shadow Effect
    this._shadowFallbackRegistered && renderEnv.unregisterFallbackEffect('ShadowCaster');
  }
}
```

The subgraph is implemented as follows:

```ts
export interface IForwardBaseSubGraphOptions {
  // Camera
  camera: BaseCamera;
  // Global uniforms for the ForwardBase stage
  fbUniforms: engine.UniformBlock;
  // Global uniforms for the ForwardAdd stage
  faUniforms: engine.UniformBlock;
  // Global uniforms for the UI stage
  uiUniforms?: engine.UniformBlock;
  // Global uniforms for the shadow stage
  scUniforms?: engine.UniformBlock;
  // #if defined(EDITOR)
  rcUniforms?: engine.UniformBlock;
  // #endif
}

/**
 * Default subgraph of the built-in forward rendering pipeline; one is generated per camera.
 * It also contains the ForwardAdd part.
 */
export default class ForwardBaseSubGraph extends RenderGraph<IForwardBaseSubGraphOptions> {
  // View used to clear the screen by default
  protected _defaultClearView?: engine.View;

  public onActive(context: RenderSystem, options: IForwardBaseSubGraphOptions) {
    this._rebuildRG(options);
  }

  // Build the whole graph from `cameras`; the camera list is already sorted
  protected _rebuildRG(options: IForwardBaseSubGraphOptions) {
    // First clear the entire graph
    this._clear();
    const {camera} = options;
    if (camera instanceof UICamera) {
      // For a UI camera, build the UI camera subgraph
      const cameraNode = this.createNode<RGCameraNode>(`camera-${camera.entity.name}`, RGCameraNode, {camera});
      const clearNode = this.createNode<RGClearNode>('ui-clear', RGClearNode, {});
      const uiNode = this.createNode<RGUIPrepareNode>('ui-prepare', RGUIPrepareNode, {camera});
      const uiRTNode = this.createNode<RGGenRenderTargetNode>('render-target', RGGenRenderTargetNode, {createRenderTarget: () => this._createRT(camera)});
      const uiRenderNode = this.createNode<RGRenderNode>('ui-render', RGRenderNode, {
        lightMode: 'ForwardBase',
        createUniformBlocks: () => [options.uiUniforms!]
      });
      this.connect(cameraNode, uiRenderNode, 'camera');
      this.connect(cameraNode, clearNode, 'camera');
      this.connect(uiRTNode, clearNode, 'renderTarget');
      this.connect(uiRTNode, uiRenderNode, 'renderTarget');
      this.connect(clearNode, uiRenderNode);
      this.connect(uiNode, uiRenderNode, 'meshList');
      return;
    }
    this._buildNodesForCamera(camera as Camera, options);
  }

  // Will return `lastNode`, which is used to connect each subgraph to ensure the order
  protected _buildNodesForCamera(camera: Camera, options: IForwardBaseSubGraphOptions) {
    // ForwardBase node creation
    let rtNode!: RGGenRenderTargetNode;
    if (camera.postProcess) {
      rtNode = this.createNode<RGGenRenderTargetNode>('render-target', RGGenRenderTargetNode, {createRenderTarget: () => this._createPostProcessRT(camera, camera.postProcess?.hdr || false)});
    } else {
      rtNode = this.createNode<RGGenRenderTargetNode>('render-target', RGGenRenderTargetNode, {createRenderTarget: () => this._createRT(camera)});
    }
    const cameraNode = this.createNode<RGCameraNode>(`camera-${camera.entity.name}`, RGCameraNode, {camera});
    const fwCullNode = this.createNode<RGCullNode>('fb-cull', RGCullNode, {lightMode: 'ForwardBase'});
    const fwClearNode = this.createNode<RGClearNode>('fb-clear', RGClearNode, {});
    const fwRenderNode = this.createNode<RGRenderNode<{shadowMap: 'RenderTarget'}>>('fb-render', RGRenderNode, {
      lightMode: 'ForwardBase',
      createUniformBlocks: () => [options.fbUniforms!],
      inputTypes: {'shadowMap': 'RenderTarget'},
      uniformsMap: {
        u_shadowMapTex: {
          inputKey: 'shadowMap',
          name: 'color'
        }
      }
    });

    // Skybox node connections
    if (camera.drawSkybox) {
      const skyBoxNode = this.createNode<RGSkyBoxNode>('skybox', RGSkyBoxNode, {});
      const skyBoxRenderNode = this.createNode<RGRenderNode>('skybox-render', RGRenderNode, {
        lightMode: 'Skybox',
        createUniformBlocks: () => [options.fbUniforms!]
      });
      this.connect(cameraNode, skyBoxRenderNode, 'camera');
      this.connect(skyBoxNode, skyBoxRenderNode, 'meshList');
      this.connect(rtNode, skyBoxRenderNode, 'renderTarget');
      this.connect(skyBoxRenderNode, fwRenderNode);
    }

    // Shadow rendering node connections
    if (camera.shadowMode !== engine.EShadowMode.None) {
      const scRTNode = this.createNode<RGGenRenderTargetNode>('sc-render-target', RGGenRenderTargetNode, {createRenderTarget: () => this._createRT(camera, 'ShadowCaster')});
      const scRenderNode = this.createNode<RGLightNode>('shadow-caster', RGLightNode, {
        lightMode: 'ShadowCaster',
        createUniformBlocks: () => [options.scUniforms!]
      });
      this.connect(cameraNode, scRenderNode, 'camera');
      this.connect(scRTNode, scRenderNode, 'renderTarget');
      this.connect(fwCullNode, scRenderNode, 'meshList');
      this.connect(scRenderNode, fwRenderNode, 'shadowMap');
    }

    // ForwardBase node connections
    this.connect(cameraNode, fwCullNode, 'camera');
    this.connect(cameraNode, fwRenderNode, 'camera');
    this.connect(rtNode, fwClearNode, 'renderTarget');
    this.connect(cameraNode, fwClearNode, 'camera');
    this.connect(rtNode, fwRenderNode, 'renderTarget');
    this.connect(fwClearNode, fwRenderNode);
    this.connect(fwCullNode, fwRenderNode, 'meshList');

    // ForwardAdd node creation and connections
    const faCullLightNode = this.createNode<RGCullLightFANode>('fa-cull-light', RGCullLightFANode, {});
    const faCullMeshNode = this.createNode<RGCullMeshFANode>('fa-cull-mesh', RGCullMeshFANode, {});
    const faRenderNode = this.createNode<RGFARenderNode>('fa-render', RGFARenderNode, {
      lightMode: 'ForwardAdd',
      createUniformBlocks: () => [options.faUniforms!],
    });
    this.connect(fwCullNode, faCullLightNode, 'meshList');
    this.connect(fwCullNode, faCullMeshNode, 'meshList');
    this.connect(faCullLightNode, faCullMeshNode);
    this.connect(fwRenderNode, faRenderNode);
    this.connect(cameraNode, faRenderNode, 'camera');
    this.connect(rtNode, faRenderNode, 'renderTarget');
    this.connect(faCullMeshNode, faRenderNode, 'meshList');
    this.connect(faCullLightNode, faRenderNode, 'lightList');

    // Post-processing node creation and connections
    let finalBlitNode!: RGBlitPostProcessNode;
    if (camera.postProcess) {
      let preNode: RGNode<any, any, any> = rtNode;
      const {hdr, steps} = camera.postProcess.getData();
      steps.forEach((step, index) => {
        let currentNode: RGNode<any, any, any>;
        switch (step.type) {
          case EBuiltinPostProcess.BLIT:
            currentNode = this.createNode('post-process-blit', RGBlitPostProcessNode, {hdr, ...step.data});
            break;
          case EBuiltinPostProcess.BLOOM:
            currentNode = this.createNode('post-process-bloom', RGBloomPostProcessNode, {hdr, ...step.data});
            break;
          case EBuiltinPostProcess.FXAA:
            currentNode = this.createNode('post-process-fxaa', RGFXAAPostProcessNode, {hdr, ...step.data});
            break;
          case EBuiltinPostProcess.HDR:
            currentNode = this.createNode('post-process-tone', RGHDRPostProcessNode, {hdr, ...step.data});
            break;
        }
        // The previous node's output becomes this node's `sourceTex`
        this.connect(preNode, currentNode, 'sourceTex');
        // The first step is also ordered after the ForwardAdd pass
        index === 0 && this.connect(faRenderNode, currentNode);
        preNode = currentNode;
      });
      finalBlitNode = this.createNode('final-blit', RGBlitPostProcessNode, {dstTex: this._createRT(camera)});
      this.connect(preNode, finalBlitNode, 'sourceTex');
    }

    // Gizmo node connections
    if (camera.drawGizmo) {
      const gizmoNode = this.createNode<RGGizmosNode>('gizmo', RGGizmosNode, {});
      const gizmoRenderNode = this.createNode<RGRenderNode>('gizmo-render', RGRenderNode, {
        lightMode: 'ForwardBase',
        createUniformBlocks: () => [options.fbUniforms!],
      });
      const rect = camera.viewport;
      const x = rect ? rect.xMin : 0;
      const y = rect ? rect.yMin : 0;
      const width = rect ? rect.width : 1;
      const height = rect ? rect.height : 1;
      const viewPortRect: engine.IRect = {x, y, w: width, h: height};
      const scissorRect: engine.IRect = {x, y, w: width, h: height};
      const gizmoView = new engine.View({
        passAction: {
          depthAction: engine.ELoadAction.CLEAR,
          clearDepth: 1,
          colorAction: engine.ELoadAction.LOAD,
          stencilAction: engine.ELoadAction.LOAD,
        },
        viewport: viewPortRect,
        scissor: scissorRect
      });
      // Clear depth before drawing gizmos so that they are drawn on top
      const gizmoClearDepthNode = this.createNode<RGClearNode>('gizmo-clear-depth', RGClearNode, {});
      const gizmoViewNode = this.createNode<RGGenViewNode>('gizmo-view', RGGenViewNode, {viewObject: {view: gizmoView}});
      this.connect(rtNode, gizmoClearDepthNode, 'renderTarget');
      this.connect(finalBlitNode || faRenderNode, gizmoViewNode);
      this.connect(gizmoViewNode, gizmoClearDepthNode, 'camera');
      this.connect(gizmoClearDepthNode, gizmoRenderNode);
      this.connect(cameraNode, gizmoNode, 'camera');
      this.connect(cameraNode, gizmoRenderNode, 'camera');
      this.connect(rtNode, gizmoRenderNode, 'renderTarget');
      this.connect(gizmoNode, gizmoRenderNode, 'meshList');
    }
  }

  // Create a RenderTarget from the camera and lightMode
  protected _createRT(camera: BaseCamera, lightMode: string = 'ForwardBase') {
    let fbRT: RenderTexture | Screen | null = null;
    if (camera.renderTarget) {
      fbRT = camera.renderTarget;
    } else {
      // Both the simulator and the IDE need this logic, so it is not wrapped in an EDITOR define
      if (camera.editorRenderTarget) {
        fbRT = camera.editorRenderTarget;
      } else {
        fbRT = this.context.screen;
      }
    }
    if (lightMode === 'ShadowCaster') {
      // For the shadow pass, create a dedicated RenderTexture
      return new RenderTexture({width: 2048, height: 2048});
    }
    return fbRT;
  }

  protected _createPostProcessRT(camera: BaseCamera, hdr: boolean): RenderTexture {
    const origRT = camera.renderTarget || this.context.screen;
    const {width, height} = origRT;
    return new RenderTexture({width, height, colors: [{
      pixelFormat: hdr ? engine.ETextureFormat.RGBA16F : engine.ETextureFormat.RGBA8
    }]});
  }
}
```

# Debug pipeline

The debug pipeline `DebugRG` is used specifically to check whether the current rendering environment works correctly. Using it is simple:

```ts
// The `texture` option can be changed; green is used here
const debugRG = new engine.DebugRG({texture: engine.BuildInTextures.green});
game.renderSystem.useRenderGraph(debugRG);
```

If a green patch covering a quarter of the screen appears, the rendering system is working properly.