# Depth Estimation

VisionKit provides depth estimation capabilities.

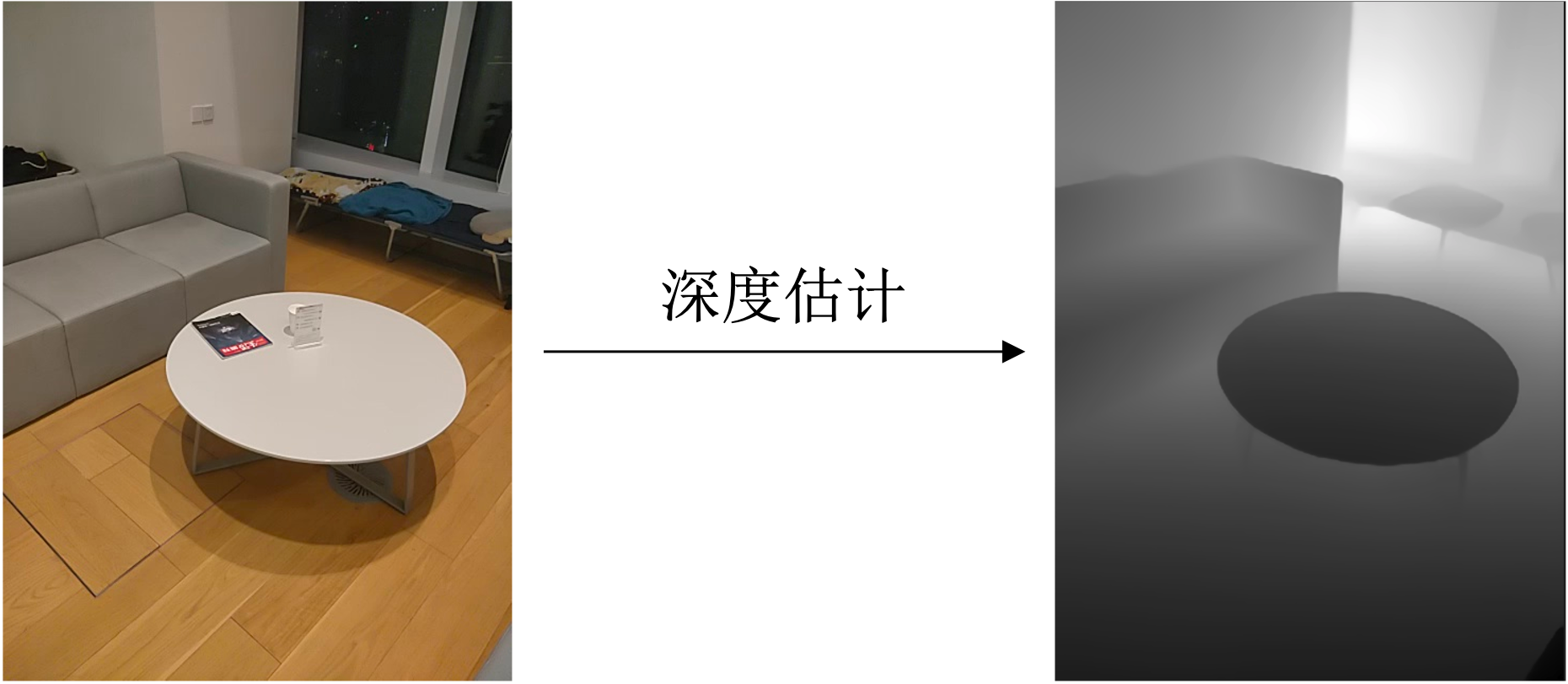

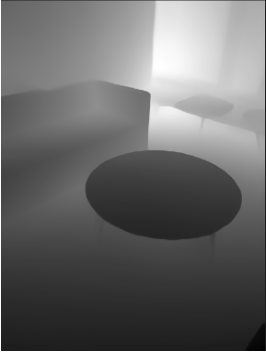

Depth estimation obtains, for each point in an image, its distance from the camera. Together these distances form a depth map.

# Method Definition

Two modes are provided for depth estimation:

- Visual mode (supported from Android WeChat >= 8.0.37 and iOS WeChat >= 8.0.38): estimates depth using the camera.

- AR mode (supported from Android WeChat >= 8.0.38 with base library >= 2.33.0, and iOS WeChat >= 8.0.39 with base library >= 3.0.0): estimates depth using the camera and the IMU. The output is a nonlinear depth map controlled by the near and far values, intended for virtual-real occlusion. Only some device models are currently supported; the list of supported models is the same as for the 6DoF horizontal AR (V2 plane AR) interface.

| Interface type | Accuracy | Device coverage |
|---|---|---|
| Visual mode | Medium | High |
| AR mode | High | Medium |
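The AR-mode description does not state exactly how the nonlinear values relate to distance. As a rough sketch, assuming the output follows the standard OpenGL perspective depth-buffer convention (values in [0, 1] derived from the near and far planes), a value could be converted back to a linear distance as shown below. Both the formula and the helper names `linearizeDepth` and `linearizeDepthBuffer` are assumptions for illustration, not part of VisionKit.

```javascript
// Sketch only. ASSUMPTION: depth values in [0, 1] follow the OpenGL
// perspective depth-buffer convention; VisionKit does not document this.
function linearizeDepth(d, near, far) {
  // Map the [0, 1] depth value to normalized device coordinates in [-1, 1]
  const zNdc = 2 * d - 1;
  // Invert the perspective projection for the z coordinate
  return (2 * near * far) / (far + near - zNdc * (far - near));
}

// Apply the same conversion to every value of a depth buffer
function linearizeDepthBuffer(buffer, near, far) {
  const out = new Float32Array(buffer.length);
  for (let i = 0; i < buffer.length; i++) {
    out[i] = linearizeDepth(buffer[i], near, far);
  }
  return out;
}
```

Under this assumption, a value of 0 maps to the near distance and a value of 1 maps to the far distance; with near = 1 and far = 10, for example, the two ends come out as 1 and 10.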

# Visual Mode Interface

The visual mode interface supports two ways to perform depth estimation: from a static input image, or in real time from the camera.

- Static image estimation

Pass an image to the VKSession.detectDepth interface, then listen for the resulting depth map through the VKSession.on interface. Each pixel value in the depth map represents the depth at that point: the darker the pixel, the closer it is to the camera; the whiter the pixel, the farther away it is.

Example code:

```js
const session = wx.createVKSession({
  track: {
    depth: {
      mode: 2, // mode: 1 - use the camera; 2 - pass in images manually
    },
  },
  gl: this.gl,
})

// In static image estimation mode, an updateAnchors event is triggered each time detectDepth is called
session.on('updateAnchors', anchors => {
  anchors.forEach(anchor => {
    console.log('anchor.depthArray', anchor.depthArray) // Depth map data as an ArrayBuffer
    console.log('anchor.size', anchor.size) // Depth map size as an array [width, height]
  })
})

// start must be called once before detection
session.start(errno => {
  if (errno) {
    // On failure, errno is returned
  } else {
    // Otherwise errno is null, indicating success
    // frameBuffer, width and height are assumed to be defined by your code
    session.detectDepth({
      frameBuffer, // ArrayBuffer with the RGBA pixel data of the image to analyze
      width, // Image width in pixels
      height, // Image height in pixels
    })
  }
})
```
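detectDepth takes the RGBA pixels of the image as an ArrayBuffer together with its width and height. A minimal sketch of packaging an ImageData-like object (one with `data`, `width` and `height` fields, for example the result of a 2D canvas `getImageData` call) into that shape, with a size check, could look like this. `toDetectDepthInput` is a hypothetical helper, not a VisionKit API.

```javascript
// Sketch: package RGBA pixel data into the { frameBuffer, width, height }
// shape used by detectDepth. toDetectDepthInput is a hypothetical helper.
function toDetectDepthInput({ data, width, height }) {
  const expected = width * height * 4; // 4 bytes (R, G, B, A) per pixel
  if (data.length !== expected) {
    throw new RangeError(`Expected ${expected} bytes of RGBA data, got ${data.length}`);
  }
  // Copy exactly the bytes covered by the typed array, in case it is a
  // view into a larger ArrayBuffer.
  const frameBuffer = data.buffer.slice(data.byteOffset, data.byteOffset + data.byteLength);
  return { frameBuffer, width, height };
}
```

The result can be passed straight to the call, for example `session.detectDepth(toDetectDepthInput(imageData))`. Copying with `slice` costs one allocation but guarantees the buffer contains only this image's pixels.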

- Real-time estimation via the camera

The algorithm outputs a depth map for the current frame in real time. Each pixel value represents the depth at that point: the darker the pixel, the closer it is to the camera; the whiter the pixel, the farther away it is.

Example code:

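```js
const session = wx.createVKSession({
  track: {
    depth: {
      mode: 1, // mode: 1 - use the camera; 2 - pass in images manually
    },
  },
})

// start must be called once before frames can be read
session.start(errno => {
  if (errno) {
    // On failure, errno is returned
    return
  }
  // Otherwise errno is null, indicating success.
  // Read the depth map once per frame (canvas and render are defined elsewhere in your code).
  const onFrame = () => {
    // Get the information for the current frame
    const frame = session.getVKFrame(canvas.width, canvas.height)
    if (frame) {
      // Get the depth map of the current frame
      const depthBufferRes = frame.getDepthBuffer()
      const depthBuffer = new Float32Array(depthBufferRes.DepthAddress)
      // Rendering logic: upload the array values to a texture and draw it to the screen
      render(depthBuffer)
    }
    session.requestAnimationFrame(onFrame)
  }
  session.requestAnimationFrame(onFrame)
})
```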
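The render step is left to the app. One possible sketch, assuming the depth buffer holds one float per pixel in row-major order and that larger values mean farther points, min-max normalizes the values into grayscale RGBA bytes (darker is closer, whiter is farther) that could then be uploaded as a texture or drawn to a canvas. `depthToGrayscaleRGBA` is a hypothetical helper, not a VisionKit API.

```javascript
// Sketch: convert a depth buffer into grayscale RGBA pixels for display.
// ASSUMPTION: one float per pixel, larger values are farther away.
function depthToGrayscaleRGBA(depth) {
  // Find the depth range of this frame
  let min = Infinity;
  let max = -Infinity;
  for (const v of depth) {
    if (v < min) min = v;
    if (v > max) max = v;
  }
  const range = max - min || 1; // avoid dividing by zero on a flat depth map
  const rgba = new Uint8ClampedArray(depth.length * 4);
  for (let i = 0; i < depth.length; i++) {
    // Nearest value becomes black (0), farthest becomes white (255)
    const gray = Math.round(((depth[i] - min) / range) * 255);
    rgba[i * 4] = gray;     // R
    rgba[i * 4 + 1] = gray; // G
    rgba[i * 4 + 2] = gray; // B
    rgba[i * 4 + 3] = 255;  // A (opaque)
  }
  return rgba;
}
```

Normalizing per frame makes every frame use the full black-to-white range, at the cost of brightness shifting as the scene's depth range changes; a fixed range would keep colors stable instead.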

# AR mode interface

See Horizontal AR V2 + Virtual-Real Occlusion.

# Explanation of the real-time estimation output

# 1. Effects display

# 2. Key output fields for depth estimation

The real-time depth estimation output includes the following fields:

```
struct anchor
{
  DepthAddress, // Address of the depth map ArrayBuffer; use it as new Float32Array(depthBufferRes.DepthAddress)
  width,        // Width of the returned depth map
  height,       // Height of the returned depth map
}
```
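Because the depth map has its own width and height, which may differ from the canvas size, reading the depth under a point on the canvas requires scaling the point into depth-map coordinates. The sketch below assumes a row-major Float32 layout with the origin at the top-left and uses nearest-neighbor sampling; `sampleDepthAt` and its parameters are hypothetical.

```javascript
// Sketch: read the depth value under a canvas point.
// ASSUMPTIONS: row-major Float32 layout, top-left origin, nearest-neighbor sampling.
function sampleDepthAt(depthAddress, width, height, x, y, canvasWidth, canvasHeight) {
  const depth = new Float32Array(depthAddress);
  // Scale canvas coordinates to depth-map pixel indices and clamp to the grid
  const col = Math.min(width - 1, Math.max(0, Math.floor((x / canvasWidth) * width)));
  const row = Math.min(height - 1, Math.max(0, Math.floor((y / canvasHeight) * height)));
  return depth[row * width + col];
}
```

For example, with a 3 x 2 depth map and a 300 x 200 canvas, the canvas center (150, 100) reads depth-map pixel (column 1, row 1).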

# Example application scenarios

- Special effects scenes.

- AR games and applications, such as AR virtual-real occlusion.
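Virtual-real occlusion comes down to a per-pixel depth comparison: a virtual pixel is hidden wherever the real scene is closer to the camera. The sketch below assumes the real and virtual depth buffers have the same size and store comparable distances (linear, same units); `occlusionMask` is a hypothetical helper. In a real app this comparison would typically run on the GPU, for example in a fragment shader, rather than in a JavaScript loop.

```javascript
// Sketch of per-pixel virtual-real occlusion.
// ASSUMPTIONS: equal-length, row-major buffers holding comparable distances.
function occlusionMask(realDepth, virtualDepth) {
  if (realDepth.length !== virtualDepth.length) {
    throw new RangeError("Depth buffers must have the same length");
  }
  const mask = new Uint8Array(realDepth.length);
  for (let i = 0; i < realDepth.length; i++) {
    // 1 = draw the virtual pixel, 0 = hidden behind the real scene
    mask[i] = virtualDepth[i] <= realDepth[i] ? 1 : 0;
  }
  return mask;
}
```

For instance, `occlusionMask([1, 5, 3], [2, 2, 3])` hides the first virtual pixel (the real surface at 1 is in front of it) and keeps the other two.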

# Program Examples

Visual Mode:

- Reference for using real-time depth estimation

- Reference for using static image depth estimation

AR mode:

# Demo Experience

Visual Mode:

- Real-time depth estimation: in the Weixin Mini Program example, open Interface - VisionKit Visual Capabilities - Real-time depth map detection.

- Photo depth estimation: in the Weixin Mini Program example, open Interface - VisionKit Visual Capabilities - Photo depth map detection.

AR mode:

- In the Weixin Mini Program example, open Interface - VisionKit Visual Capabilities - Horizontal AR-v2 - Virtual occlusion.