# Face Keypoint Detection

Starting with base library version 2.25.0 (Android WeChat >= 8.0.25, iOS WeChat > 8.0.24), VisionKit provides face keypoint detection as a capability interface parallel to the marker and OSD capabilities.

Starting with version 8.1.0, face 3D keypoint detection is available as an extension of the face 2D keypoint detection interface.
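Because face keypoint detection depends on the base library version, a page may want to check the version before creating a session. The sketch below is a generic dotted-version comparison; `compareVersion` and `supportsFaceKeypoints` are hypothetical helpers, not VisionKit APIs. In a Mini Program the version string would typically be read from the `SDKVersion` field returned by `wx.getSystemInfoSync()`. The WeChat client version requirements above are not checked here.

```javascript
// Compare two dotted version strings such as "2.25.0" and "2.9.1".
// Returns 1 if a > b, -1 if a < b, and 0 if they are equal.
function compareVersion(a, b) {
  const pa = a.split('.').map(Number)
  const pb = b.split('.').map(Number)
  const len = Math.max(pa.length, pb.length)
  for (let i = 0; i < len; i++) {
    // Missing (or non-numeric) components count as 0, so "2.25" equals "2.25.0".
    const x = pa[i] || 0
    const y = pb[i] || 0
    if (x > y) return 1
    if (x < y) return -1
  }
  return 0
}

// Hypothetical helper: face 2D keypoints need base library >= 2.25.0.
function supportsFaceKeypoints(sdkVersion) {
  return compareVersion(sdkVersion, '2.25.0') >= 0
}
```

Usage in a page might look like `if (supportsFaceKeypoints(wx.getSystemInfoSync().SDKVersion)) { /* create the VKSession */ }`.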

# Method Definition

There are two ways to detect face keypoints: pass in a static image for detection, or detect faces in real time through the camera.

# 1. Static Image Detection

Pass an image to the VKSession.detectFace interface; the algorithm detects the face in the image, and VKSession.on then outputs the face position, the 106 keypoints, and the face's rotation angles.

Example code:

```javascript
const session = wx.createVKSession({
  track: {
    face: { mode: 2 } // mode: 1 - use the camera; 2 - pass in images manually
  },
})

// In static image mode, each call to detectFace triggers one updateAnchors event.
session.on('updateAnchors', anchors => {
  anchors.forEach(anchor => {
    console.log('anchor.points', anchor.points)
    console.log('anchor.origin', anchor.origin)
    console.log('anchor.size', anchor.size)
    console.log('anchor.angle', anchor.angle)
  })
})

// start must be called once before detection can begin
session.start(errno => {
  if (errno) {
    // On failure, an errno is returned
  } else {
    // Otherwise errno is null, meaning success.
    // frameBuffer, width, and height must already describe the image to analyze.
    session.detectFace({
      frameBuffer,         // image data as an ArrayBuffer: face image pixels, every four entries are one pixel's RGBA
      width,               // image width
      height,              // image height
      scoreThreshold: 0.5, // score threshold
      sourceType: 1,
      modelMode: 1,
    })
  }
})
```
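detectFace expects `frameBuffer` to hold raw pixels, four bytes (R, G, B, A) per pixel, so its byte length should equal `width * height * 4`. The hypothetical helpers below illustrate that layout: one checks the buffer size before calling detectFace, and one expands tightly packed RGB bytes into RGBA by setting alpha to 255. Neither is part of the VisionKit API; in practice RGBA data often comes from a canvas `getImageData` call, which already uses this layout.

```javascript
// Hypothetical helpers illustrating the RGBA frameBuffer layout used by detectFace.

// Check that an ArrayBuffer holds exactly width * height RGBA pixels.
function isValidFrameBuffer(frameBuffer, width, height) {
  return (
    frameBuffer instanceof ArrayBuffer &&
    Number.isInteger(width) && width > 0 &&
    Number.isInteger(height) && height > 0 &&
    frameBuffer.byteLength === width * height * 4
  )
}

// Expand packed RGB bytes (3 per pixel) into an RGBA ArrayBuffer (4 per pixel),
// setting every alpha byte to 255 (fully opaque).
function rgbToRgbaFrameBuffer(rgb, width, height) {
  const pixelCount = width * height
  if (rgb.length !== pixelCount * 3) {
    throw new RangeError('expected width * height * 3 RGB bytes')
  }
  const rgba = new Uint8Array(pixelCount * 4)
  for (let i = 0; i < pixelCount; i++) {
    rgba[i * 4] = rgb[i * 3]         // R
    rgba[i * 4 + 1] = rgb[i * 3 + 1] // G
    rgba[i * 4 + 2] = rgb[i * 3 + 2] // B
    rgba[i * 4 + 3] = 255            // A
  }
  return rgba.buffer
}
```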

# 2. Real-Time Detection via the Camera

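The algorithm detects the face in the camera feed in real time and, through [VKSession.on](https://developers.weixin.qq.com/miniprogram/dev/api/ai/visionkit/VKSession.on.html), continuously outputs the face position, the 106 keypoints, and the face's rotation angles in the 3D coordinate system.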

Example code:

```javascript
const session = wx.createVKSession({
  track: {
    face: { mode: 1 } // mode: 1 - use the camera; 2 - pass in images manually
  },
})

// In camera real-time mode, updateAnchors fires continuously (once per frame) while a face is detected.
session.on('updateAnchors', anchors => {
  anchors.forEach(anchor => {
    console.log('anchor.points', anchor.points)
    console.log('anchor.origin', anchor.origin)
    console.log('anchor.size', anchor.size)
    console.log('anchor.angle', anchor.angle)
  })
})

// removeAnchors fires when the face leaves the camera view
session.on('removeAnchors', () => {
  console.log('removeAnchors')
})

// start must be called once before detection begins
session.start(errno => {
  if (errno) {
    // On failure, an errno is returned
  } else {
    // Otherwise errno is null, meaning success
  }
})
```
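In camera mode, updateAnchors fires on every frame while a face is visible and removeAnchors fires when it leaves, so a page often only needs to react to the transitions. The sketch below wraps a session in a hypothetical `watchFacePresence` helper that calls `onChange(true)` once when a face appears and `onChange(false)` when it is removed. It relies only on `session.on` with the two event names used above; the returned function is an illustrative convenience, not a VisionKit feature.

```javascript
// Hypothetical helper: report face presence transitions instead of per-frame updates.
// `session` is any object with an on(eventName, callback) method, such as a VKSession.
function watchFacePresence(session, onChange) {
  let present = false
  session.on('updateAnchors', anchors => {
    // updateAnchors fires once per frame while a face is detected;
    // only notify when the state actually changes.
    if (!present && anchors.length > 0) {
      present = true
      onChange(true)
    }
  })
  session.on('removeAnchors', () => {
    // removeAnchors fires when the face leaves the camera view.
    if (present) {
      present = false
      onChange(false)
    }
  })
  // Let callers query the current state at any time.
  return () => present
}
```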

# 3. Enabling 3D Keypoint Detection

To enable face 3D keypoint detection in static image mode, add the `open3d` field to the 2D call:

```javascript
// Static image mode
session.detectFace({
  // ...same parameters as the 2D call
  open3d: true, // enable face 3D keypoint detection; defaults to false
})
```

In real-time mode, add a call to the 3D mode switch `update3DMode` to the 2D setup:

```javascript
// Camera real-time mode
session.on('updateAnchors', anchors => {
  session.update3DMode({ open3d: true }) // enable face 3D keypoint detection; defaults to false
  // ...same handling as the 2D call
})
```
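The real-time snippet calls update3DMode from inside the updateAnchors handler, so it runs on every frame. If you would rather switch 3D mode on a single time, one option is a small once-guard like the hypothetical `enable3DOnce` below. This sketch assumes that a single `update3DMode({ open3d: true })` call keeps 3D mode enabled for later frames; this page does not state that, so verify it against the VKSession.update3DMode reference before relying on it.

```javascript
// Hypothetical helper: call session.update3DMode({ open3d: true }) at most once.
// Assumption: one call keeps 3D mode on for subsequent frames.
function enable3DOnce(session) {
  let enabled = false
  return function ensure3D() {
    if (!enabled) {
      session.update3DMode({ open3d: true })
      enabled = true
    }
  }
}

// Example wiring (session created with track.face.mode = 1):
// const ensure3D = enable3DOnce(session)
// session.on('updateAnchors', anchors => {
//   ensure3D()
//   anchors.forEach(anchor => console.log('anchor.points3d', anchor.points3d))
// })
```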

# Output Description

# 1. Keypoint Definition

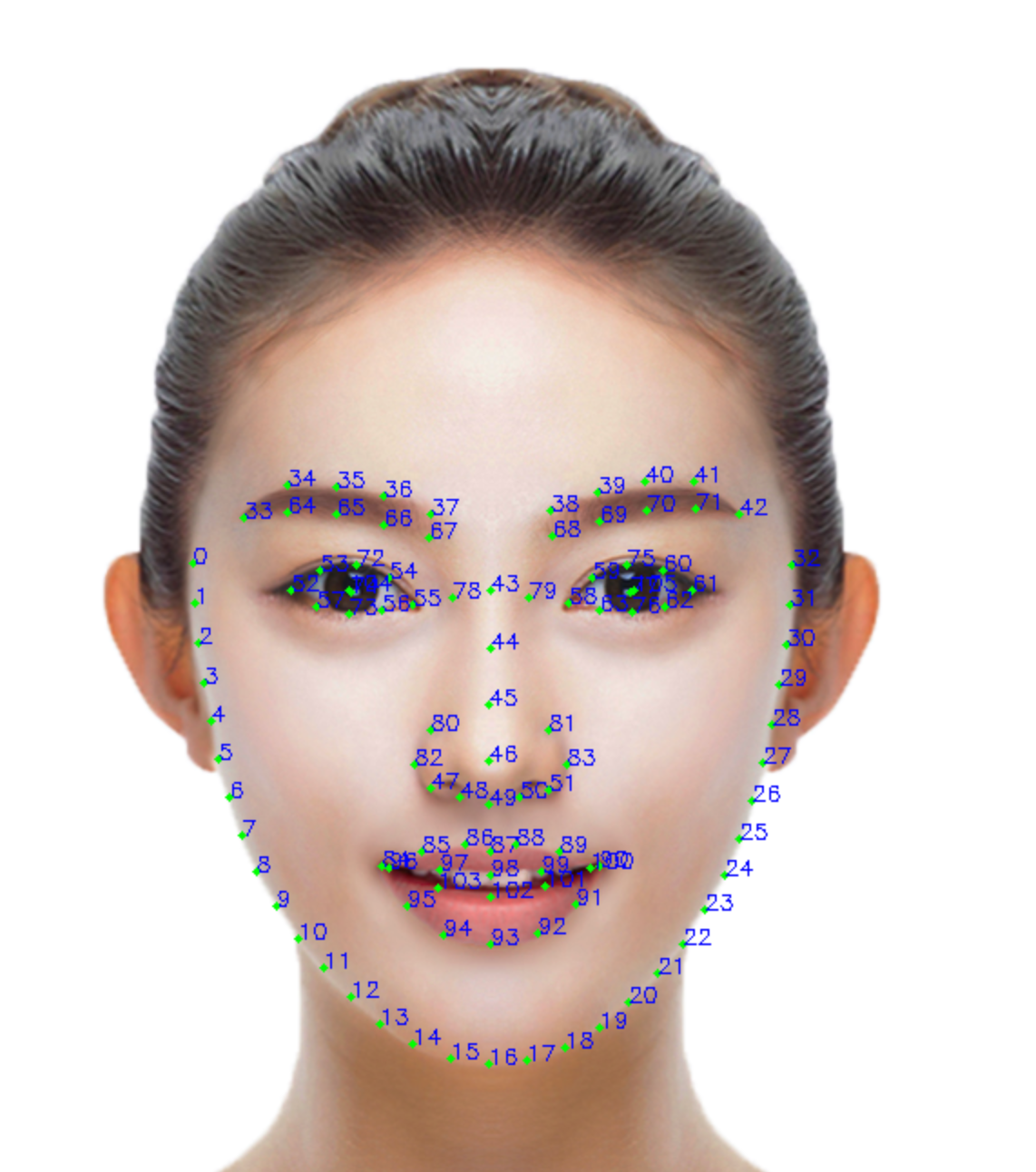

Both the face 2D keypoints and the face 3D keypoints consist of 106 points, defined as shown in the figure above. When the face pose changes, the contour points among the 2D keypoints always follow the visible edge of the face, whereas the 3D keypoints preserve the face's three-dimensional structure.

# 2. Face 2D Key Points

The face 2D keypoint output includes the following fields:

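```
struct anchor
{
  points,     // (x, y) coordinates of the 106 points in the image
  origin,     // (x, y) coordinates of the top-left corner of the face box
  size,       // width and height (w, h) of the face box
  angle,      // face angles (pitch, yaw, roll)
  confidence  // confidence of the face keypoints
}
```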
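As a sketch of consuming these fields, the hypothetical `summarizeAnchor` below discards low-confidence anchors and derives the face-box center from `origin` (top-left corner) and `size` (width and height). The exact JavaScript shapes are assumptions: `origin` as `{ x, y }`, `size` as `{ width, height }` or `{ w, h }`, `points` as an array, and `confidence` as either one number or a per-point array. Log a real anchor first to confirm them.

```javascript
// Average a confidence value that may be a single number or a per-point array (assumption).
function meanConfidence(confidence) {
  if (Array.isArray(confidence)) {
    if (confidence.length === 0) return 0
    return confidence.reduce((sum, c) => sum + c, 0) / confidence.length
  }
  // A missing confidence is treated as passing the threshold.
  return typeof confidence === 'number' ? confidence : 1
}

// Hypothetical helper: filter a face anchor by confidence and compute its box center.
function summarizeAnchor(anchor, minConfidence = 0.5) {
  if (meanConfidence(anchor.confidence) < minConfidence) {
    return null // treat low-confidence results as "no face"
  }
  // Field names for size are assumed; both spellings are accepted.
  const w = anchor.size.width ?? anchor.size.w
  const h = anchor.size.height ?? anchor.size.h
  return {
    // origin is the top-left corner, so the center is offset by half the box size
    center: { x: anchor.origin.x + w / 2, y: anchor.origin.y + h / 2 },
    pointCount: anchor.points.length, // expected to be 106
    angle: anchor.angle,              // (pitch, yaw, roll) as reported
  }
}
```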

# 3. Face 3D Key Points

Once face 3D keypoint detection is enabled, both the 2D and the 3D keypoint information is available:

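```
struct anchor
{
  ...,          // face 2D keypoint output fields
  points3d,     // (x, y, z) 3D coordinates of the 106 face points
  camExtArray,  // camera extrinsic matrix, defined as [R T; 0 0 0 1] (3x3 rotation R, 3x1 translation T); the camera intrinsic and extrinsic matrices together project the 3D points back onto the image
  camIntArray   // camera intrinsic matrix, see glm::perspective(fov, width / height, near, far)
}
```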
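The field descriptions above state that the intrinsic and extrinsic matrices can project the 3D points back onto the image, and that the intrinsic matrix follows `glm::perspective`. The sketch below shows that pipeline under stated assumptions: both matrices are 16-element arrays in column-major order (glm's convention), `points3d` entries are `{ x, y, z }` objects, and normalized device coordinates are mapped to pixels with the y axis pointing down. This page confirms none of these layouts; `perspective`, `mulMat4Vec4`, and `projectPoint` are hypothetical names.

```javascript
// Build a 4x4 perspective matrix as glm::perspective does (right-handed, depth -1..1),
// stored column-major: element (row r, column c) is at index c * 4 + r.
function perspective(fovY, aspect, near, far) {
  const f = 1 / Math.tan(fovY / 2)
  const m = new Array(16).fill(0)
  m[0] = f / aspect
  m[5] = f
  m[10] = -(far + near) / (far - near)
  m[11] = -1
  m[14] = -(2 * far * near) / (far - near)
  return m
}

// Multiply a column-major 4x4 matrix by a 4-component vector.
function mulMat4Vec4(m, v) {
  const out = [0, 0, 0, 0]
  for (let r = 0; r < 4; r++) {
    for (let c = 0; c < 4; c++) {
      out[r] += m[c * 4 + r] * v[c]
    }
  }
  return out
}

// Project a 3D keypoint to pixels: clip = K * E * [x, y, z, 1], perspective divide,
// then map NDC [-1, 1] to [0, width] x [0, height] with y pointing down.
function projectPoint(p, camExtArray, camIntArray, width, height) {
  const eye = mulMat4Vec4(camExtArray, [p.x, p.y, p.z, 1])
  const clip = mulMat4Vec4(camIntArray, eye)
  const ndcX = clip[0] / clip[3]
  const ndcY = clip[1] / clip[3]
  return {
    x: (ndcX + 1) / 2 * width,
    y: (1 - ndcY) / 2 * height,
  }
}
```

With an identity extrinsic matrix and a 90-degree field of view, a point straight ahead of the camera lands at the image center, which is a quick sanity check for whichever matrix layout the real arrays turn out to use.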

# Example Application Scenarios

- Face detection.

- Face special effects.

- Face pose estimation.

- Face AR games.

# Sample Programs

- Reference usage of real-time camera face detection

- Reference usage of static image face detection

# Special Notes

If a Weixin Mini Program's face recognition feature involves collecting and storing users' biometric characteristics (such as face photos or videos, ID cards and handheld-ID photos, ID card photos, and bareheaded ID photos), this type of service must use WeChat's native face recognition interface.